Travel planning is one of the hardest tasks for AI.

AI-generated itineraries often look polished at first glance. The tone is confident. The structure feels logical. But once people try to follow the plan, problems appear. Routes zigzag across the city. Travel times do not add up. Restaurants are closed. Attractions do not exist or are no longer accessible.

These failures are not rare edge cases. They are structural problems. Travel planning combines constantly changing facts with spatial reasoning and multi-step decision-making.

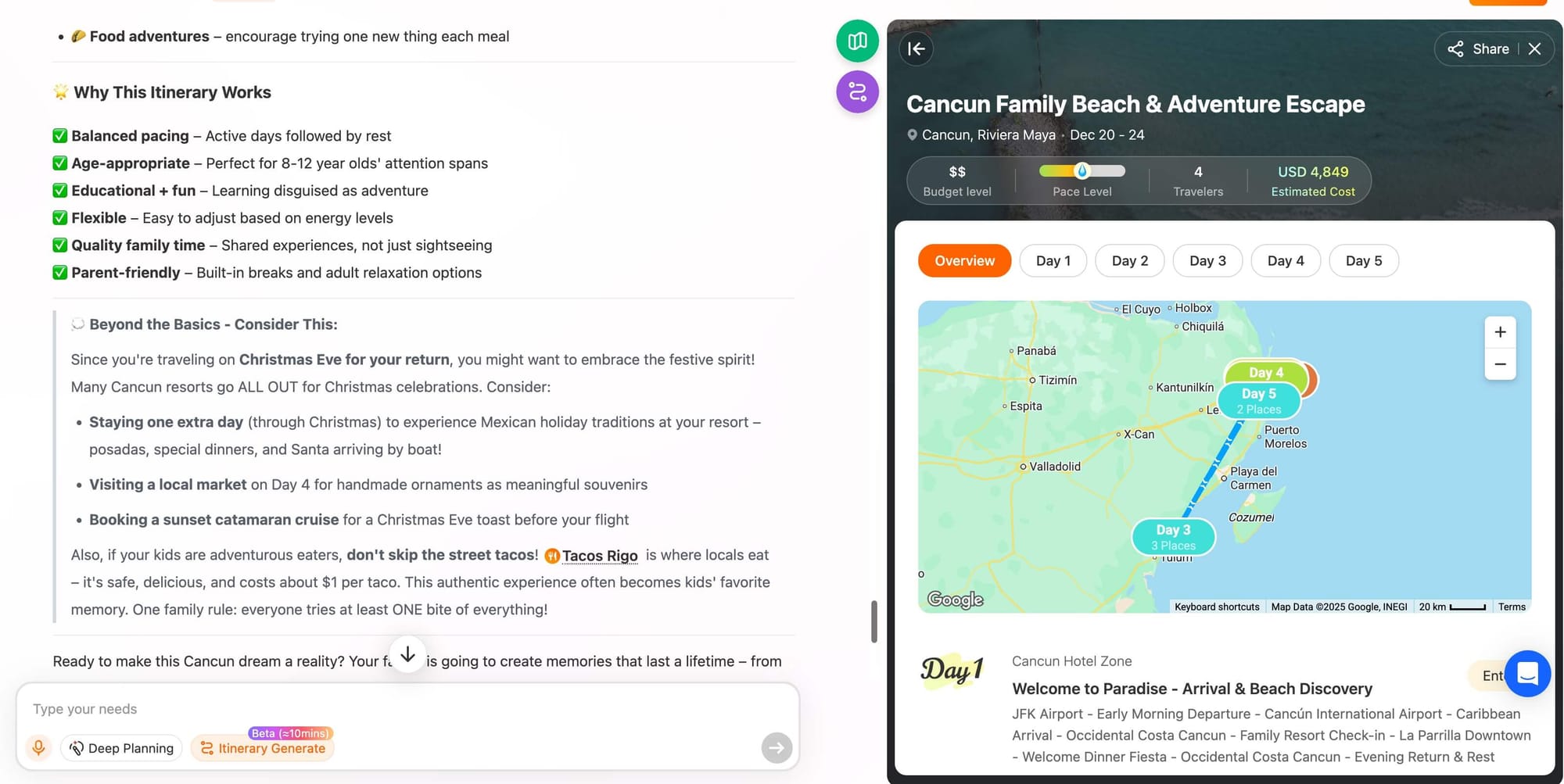

In this article, we explain why hallucinations are so common in AI trip planning and how we designed iMean AI to verify facts before it speaks.

Why Travel Planning Is a Hallucination Magnet

Grounding real-world facts is fundamentally difficult

Many AI tasks operate mainly in the world of language. Travel planning lives in the physical world.

Opening hours change without warning. Transport routes shift by season. Restaurants relocate or close quietly. Construction, strikes, and weather events disrupt plans overnight. This information is fragmented across official websites, maps, local reviews, and community updates.

Large language models (LLMs) do not verify facts on their own. If real-time data is missing, they infer based on patterns learned during training. When that happens, the system does not signal uncertainty. It produces a fluent answer.

For travel, this behavior is dangerous. A confident guess is often worse than no answer at all.

Travel planning requires long-chain reasoning

Planning a trip is not about listing places. It is about sequencing decisions over time and space.

The system must understand user intent. It must reason about geography. It must choose transportation modes. It must estimate time realistically. It must balance pace and energy across days.

Each step depends on the previous ones. If a single assumption is wrong, the rest of the plan degrades quickly. This type of long-chain reasoning remains a weak point for general-purpose language models.
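A back-of-the-envelope calculation shows why chained steps are fragile. The sketch below assumes, purely for illustration, that every step is correct independently with the same probability; real planning steps are neither independent nor equally reliable, and the 0.95 figure is an invented example, not a measurement.

```python
def chain_success_probability(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct.

    Assumes each step succeeds independently with the same probability,
    which is a simplification made only for this illustration.
    """
    return step_accuracy ** steps


# Illustrative only: 0.95 is an assumed per-step accuracy, not measured data.
for steps in (1, 5, 10, 20):
    print(steps, round(chain_success_probability(0.95, steps), 2))
```

Under these assumptions, a plan built from ten dependent decisions is fully correct only about 60% of the time, even though each individual step looks reliable.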

Confidence hides uncertainty

Language models are optimized to sound natural. Even when information is incomplete, the response often appears certain.

For users, this creates a false sense of trust. The itinerary looks finished. There is no visible sign that key facts were never checked.

The result is a plan that fails quietly and late.

Where Large Language Models Fail in Itinerary Planning

Grounding failures are common

Without active verification, AI frequently recommends places that are outdated or inaccurate.

Typical mistakes include suggesting attractions that closed years ago, recommending seasonal activities at the wrong time of year, and listing flights or transport options that no longer exist.

These are not subtle errors. They are basic factual mistakes caused by missing or stale information.

Reasoning chains break under complexity

As itineraries grow longer, errors compound.

The system loses track of distance. Routes backtrack unnecessarily. Days become overloaded. Travel time is ignored or underestimated. The plan still reads well, but it no longer reflects reality.
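Problems like these can be caught with a simple check once stops have coordinates. The following is a minimal sketch, not iMean AI's implementation: the `Stop` type, the 15 km/h average speed, and the sample coordinates are all assumptions, and straight-line distance understates real travel distance.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Stop:
    name: str
    lat: float
    lon: float
    visit_minutes: int


def haversine_km(a: Stop, b: Stop) -> float:
    """Great-circle distance between two stops in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (a.lat, a.lon, b.lat, b.lon))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(h))


def day_minutes(stops: list[Stop], speed_kmh: float = 15.0) -> float:
    """Visit time plus estimated travel time between consecutive stops.

    speed_kmh is an assumed average urban speed, including waiting time.
    """
    travel = sum(haversine_km(a, b) / speed_kmh * 60 for a, b in zip(stops, stops[1:]))
    return travel + sum(s.visit_minutes for s in stops)


def is_feasible(stops: list[Stop], available_minutes: float, speed_kmh: float = 15.0) -> bool:
    """True if the day fits into the time the traveler actually has."""
    return day_minutes(stops, speed_kmh) <= available_minutes


# Hypothetical stops lying on a line; visiting them out of order forces a backtrack.
a = Stop("A", 0.0, 0.0, 60)
b = Stop("B", 0.0, 0.1, 60)
c = Stop("C", 0.0, 0.2, 60)

print(is_feasible([a, b, c], available_minutes=300))  # ordered route
print(is_feasible([a, c, b], available_minutes=300))  # backtracking route
```

The two routes visit the same places for the same durations. Only the order differs, and that alone decides whether the day fits.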

Long itineraries expose the limits of text-based generation.

Prediction is not execution

Most language models generate output by predicting the next token. This works well for prose. It does not work well for planning.

A plan requires structure. It requires constraints. It requires validation. Without these elements, the model produces text that resembles a plan but does not behave like one.

Fluency and correctness are not the same thing.

From Guessing to Verifying

When we began building iMean AI, we made a simple decision. The system should not guess.

Every recommendation must be tied to verifiable information. Every decision must follow a clear process. The AI should retrieve facts, reason with structure, and validate results before responding.

This principle became the foundation of the system.

Why iMean AI Produces Fewer Hallucinations Than Typical AI Trip Planners

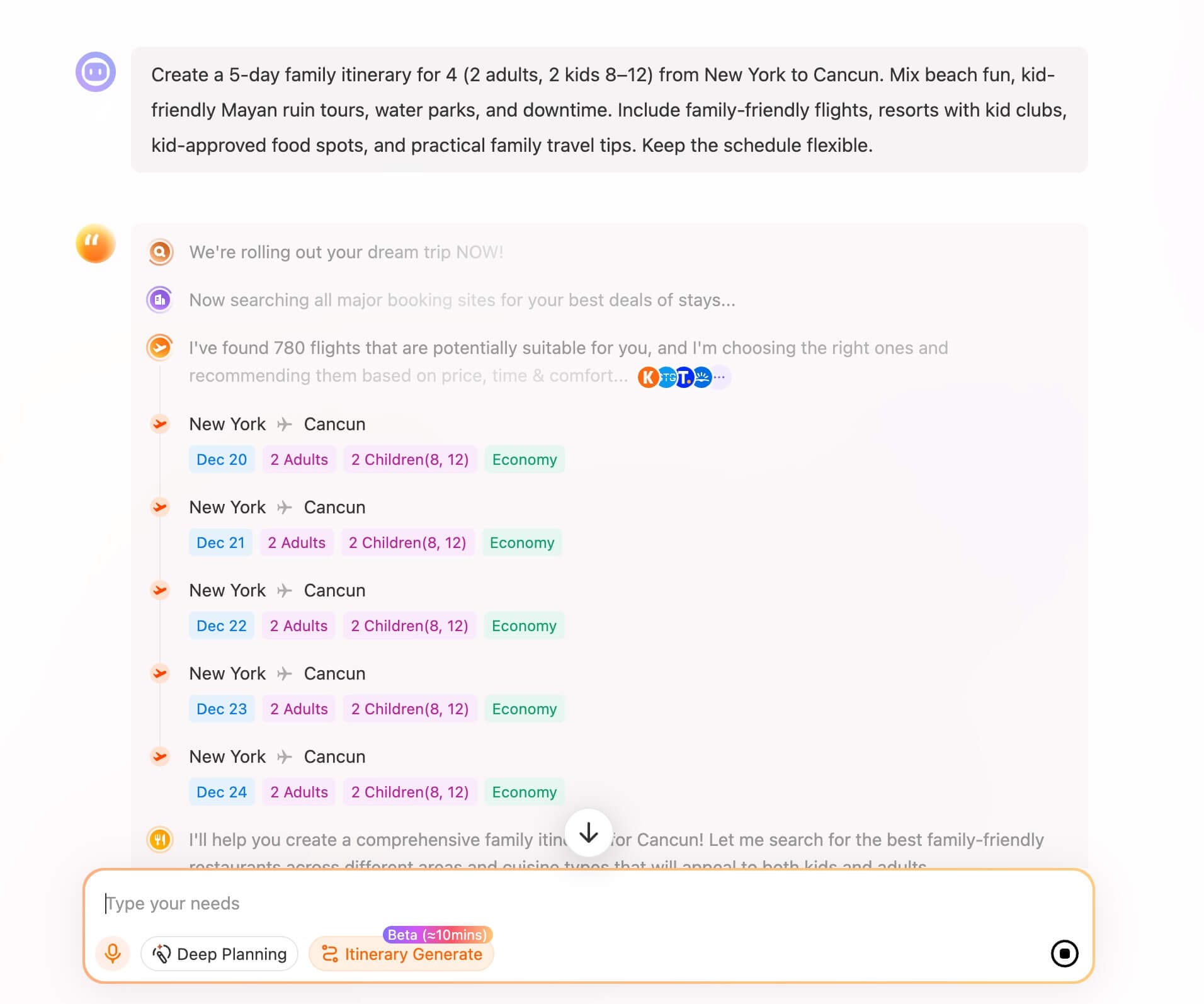

Many AI trip planners rely almost entirely on large language models. They prompt the model well and hope the output stays reasonable. This approach works for short answers. It fails when the task becomes complex and grounded in the real world.

iMean AI follows a different path. From the beginning, our goal was not to make the model write better itineraries. It was to make the system verify reality before generating anything.

This difference shows up in five core design choices.

Clarifying intent before planning

Travel intent is often vague and sometimes contradictory.

People want relaxed trips but full schedules. They want hidden spots and famous landmarks. They want efficiency without feeling rushed.

Before generating an itinerary, the system clarifies intent. Budget, pace, preferences, and constraints are made explicit. This step reduces misinterpretation and prevents downstream errors.
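One way to picture this step is as a structured record that must be complete and consistent before planning starts. The sketch below is hypothetical: the `TripIntent` fields, the pace labels, and the three-stops-per-day threshold are illustrative assumptions rather than the product's actual schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TripIntent:
    destination: Optional[str] = None
    days: Optional[int] = None
    budget_per_day: Optional[float] = None
    pace: Optional[str] = None  # assumed labels: "relaxed", "moderate", "packed"
    must_see: list[str] = field(default_factory=list)


REQUIRED_FIELDS = ("destination", "days", "budget_per_day", "pace")


def missing_fields(intent: TripIntent) -> list[str]:
    """Fields the system should ask about before it plans anything."""
    return [name for name in REQUIRED_FIELDS if getattr(intent, name) is None]


def contradictions(intent: TripIntent, max_relaxed_stops_per_day: int = 3) -> list[str]:
    """Flag requests that conflict with each other, such as relaxed pace with a full schedule."""
    issues = []
    if intent.pace == "relaxed" and intent.days:
        limit = max_relaxed_stops_per_day * intent.days
        if len(intent.must_see) > limit:
            issues.append(
                f"{len(intent.must_see)} must-see stops exceed {limit} for a relaxed {intent.days}-day trip"
            )
    return issues


intent = TripIntent(destination="Example City", days=1, pace="relaxed",
                    must_see=["A", "B", "C", "D", "E"])
print(missing_fields(intent))   # what still needs to be asked
print(contradictions(intent))   # what needs to be resolved with the user
```

Anything returned by either function becomes a question for the user instead of a silent assumption.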

Web agents as the system foundation

iMean AI did not start as a travel product. It started as a web agent system.

This matters because it shaped the entire architecture. Instead of treating the web as optional context, the system treats it as a primary source of truth.

Before suggesting a place, the AI can check official websites for opening hours and ticket rules. It can verify addresses using mapping services. It can compare information across multiple sources, including official listings and community platforms.

This process reduces errors that come from outdated or incomplete training data. The model is no longer forced to guess. It can look things up.

Most hallucinations in travel planning are not subtle reasoning mistakes. They are basic factual errors. Web-level verification removes a large portion of them.
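To make the idea concrete, here is a minimal sketch of one such check: confirming that a planned visit falls inside a venue's opening hours. The hours below are invented and stand in for data that would be retrieved from an official website; the `visit_fits` helper and its data layout are assumptions for illustration.

```python
from datetime import datetime, time, timedelta

# Weekday index (Monday = 0) -> (opening time, closing time).
# A missing weekday means the venue is closed that day.
OpeningHours = dict[int, tuple[time, time]]


def visit_fits(hours: OpeningHours, start: datetime, duration_minutes: int) -> bool:
    """True if the whole visit happens while the venue is open."""
    slot = hours.get(start.weekday())
    if slot is None:
        return False  # closed on that weekday
    opens, closes = slot
    end = start + timedelta(minutes=duration_minutes)
    # Visits that cross midnight are rejected to keep the sketch simple.
    return end.date() == start.date() and opens <= start.time() and end.time() <= closes


# Hypothetical hours: open 09:00 to 17:00, Tuesday through Sunday, closed Mondays.
museum_hours: OpeningHours = {day: (time(9, 0), time(17, 0)) for day in range(1, 7)}

print(visit_fits(museum_hours, datetime(2024, 1, 2, 15, 0), 90))  # Tuesday, ends before closing
print(visit_fits(museum_hours, datetime(2024, 1, 2, 16, 0), 90))  # Tuesday, runs past closing
print(visit_fits(museum_hours, datetime(2024, 1, 1, 10, 0), 90))  # Monday, venue closed
```

A stop that fails this check is dropped or rescheduled before the itinerary is written, rather than discovered at the door.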

Multi-source knowledge instead of single model memory

The iMean AI agent does not rely on a single model to remember everything.

The system combines several layers of information. This includes internal knowledge bases, external APIs, and real-time web retrieval. Each layer covers different types of data and failure cases.

When one source is incomplete, others can fill the gap. When sources conflict, the system can cross-check rather than invent an answer.

This multi-source approach is slower than pure generation. It is also far more reliable. The AI is grounded in evidence rather than pattern matching.
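Cross-checking can be sketched as a small reconciliation step. The example below is an assumption about how such a rule might look, not a description of the production system: the source names, the agreement threshold, and the `reconcile` function are all hypothetical.

```python
from collections import Counter
from typing import Optional


def reconcile(claims: dict[str, Optional[str]], min_agreement: int = 2) -> tuple[Optional[str], str]:
    """Accept a value only when enough sources agree on it.

    Returns (value, "verified") on clear agreement, (None, "conflict") when
    sources disagree without a clear winner, and (None, "unverified") when
    there is too little evidence. It never invents a value.
    """
    values = [v for v in claims.values() if v is not None]
    if not values:
        return None, "unverified"
    counts = Counter(values).most_common()
    top_value, top_count = counts[0]
    tied = len(counts) > 1 and counts[1][1] == top_count
    if top_count >= min_agreement and not tied:
        return top_value, "verified"
    status = "conflict" if len(counts) > 1 else "unverified"
    return None, status


# Hypothetical opening-hours claims from three kinds of sources.
claims = {
    "official_site": "09:00-17:00",
    "map_listing": "09:00-17:00",
    "community_review": "10:00-18:00",
}
print(reconcile(claims))
print(reconcile({"official_site": "09:00-17:00", "map_listing": "10:00-18:00"}))
```

The important design choice is the fallback: when evidence is missing or split, the function returns no value and a status, so downstream steps can ask again or omit the claim.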

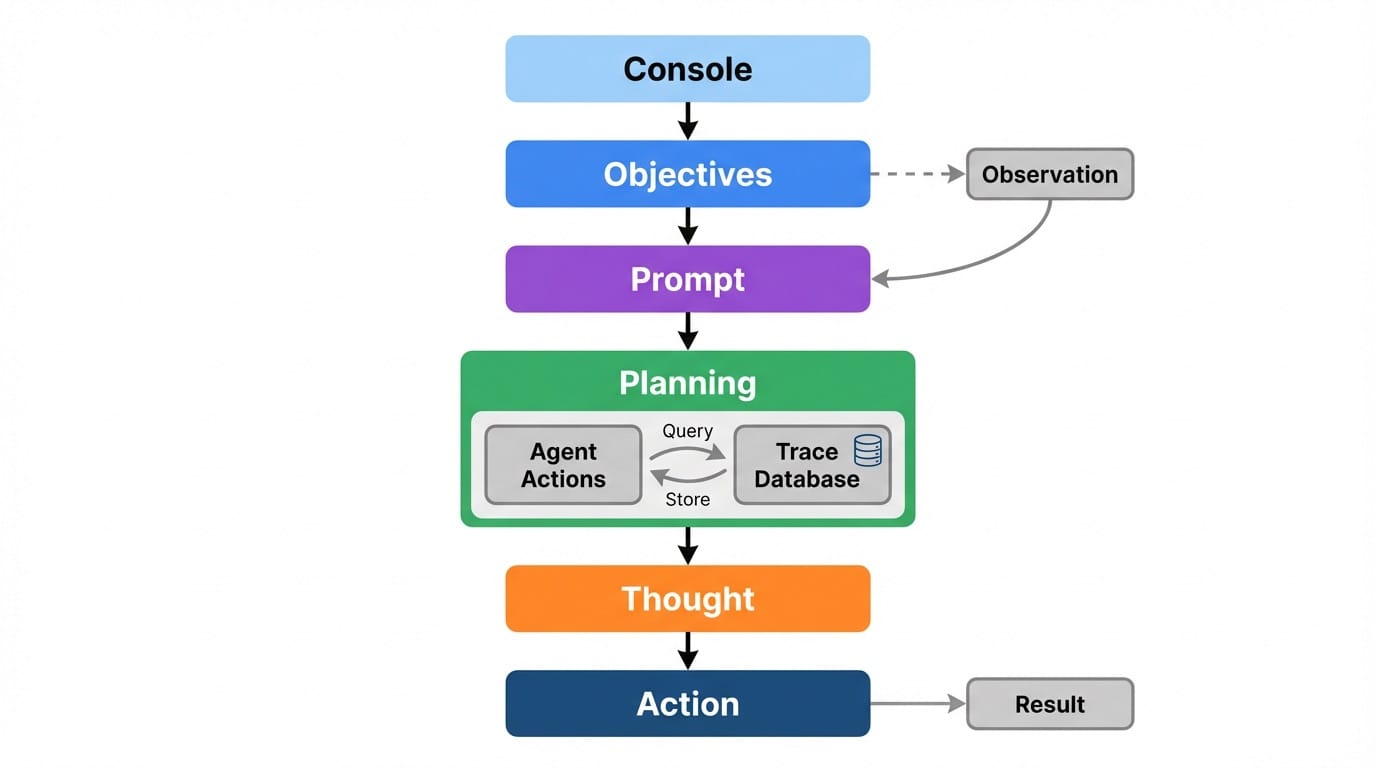

Anchor reasoning instead of free-form generation

Traditional language models generate itineraries the same way they write essays. They produce text from start to finish, guided mainly by probability.

iMean AI does not work this way. It uses a structured reasoning framework designed specifically for travel planning.

This Anchor framework breaks planning into explicit stages. Intent recognition comes first. Data retrieval follows. Spatial and temporal reasoning are handled separately. Validation happens before output.

The model is not allowed to jump ahead or skip steps. Each decision depends on previously verified results. This reduces logical drift and prevents the system from contradicting itself later in the itinerary.
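The staged flow can be illustrated with a toy pipeline in which each stage declares what it needs and refuses to run without it. This is a simplified sketch of the general pattern, not the Anchor framework itself: the stage names follow the description above, while the stage bodies, `run_pipeline`, and the state keys are placeholders invented for the example.

```python
from typing import Callable

State = dict[str, object]
# (stage name, keys that must already be in the state, stage function)
Stage = tuple[str, tuple[str, ...], Callable[[State], State]]


def run_pipeline(stages: list[Stage], state: State) -> State:
    """Run stages in order; a stage cannot run until its inputs exist."""
    for name, requires, fn in stages:
        missing = [key for key in requires if key not in state]
        if missing:
            raise RuntimeError(f"stage '{name}' cannot run; missing {missing}")
        state = {**state, **fn(state)}
    return state


# Placeholder stage bodies; real stages would call retrieval and routing logic.
def recognize_intent(state: State) -> State:
    return {"intent": {"city": state["request"], "days": 1}}

def retrieve_data(state: State) -> State:
    return {"places": ["Place B", "Place A"]}

def order_stops(state: State) -> State:
    return {"route": sorted(state["places"])}

def validate(state: State) -> State:
    if not state["route"]:
        raise ValueError("empty route")
    return {"validated": True}


STAGES: list[Stage] = [
    ("intent", ("request",), recognize_intent),
    ("retrieval", ("intent",), retrieve_data),
    ("spatiotemporal", ("places",), order_stops),
    ("validation", ("route",), validate),
]

result = run_pipeline(STAGES, {"request": "Example City"})
print(result["route"], result["validated"])
```

Calling `run_pipeline(STAGES[2:], {"request": "Example City"})` raises an error, because the routing stage has no retrieved places to work with. Skipping a step becomes a failure instead of a guess.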

The output may look similar on the surface. The process underneath is completely different.

Human feedback and memory as part of the system

Many tools treat user feedback as a separate layer. iMean AI treats it as part of the reasoning loop.

Corrections made by users are stored. Preferences are remembered. Repeated mistakes are flagged and avoided in future planning.

Over time, this keeps the same type of hallucination from recurring. The system does not just fix errors. It learns from them.
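A stripped-down version of this loop might look like the sketch below. The `CorrectionMemory` class, its method names, and the sample correction are hypothetical; a real system would persist this data and scope it per user or per region.

```python
from collections import Counter


class CorrectionMemory:
    """Stores user corrections and uses them to filter later suggestions."""

    def __init__(self) -> None:
        self._corrections: dict[str, str] = {}  # place -> latest reported issue
        self._issue_counts: Counter[str] = Counter()  # issue -> times reported

    def record(self, place: str, issue: str) -> None:
        """Remember that a user reported a problem with a suggested place."""
        self._corrections[place] = issue
        self._issue_counts[issue] += 1

    def filter_candidates(self, candidates: list[str]) -> list[str]:
        """Drop places that users have already corrected."""
        return [place for place in candidates if place not in self._corrections]

    def repeated_issues(self, threshold: int = 2) -> list[str]:
        """Issue types reported at least `threshold` times, worth flagging."""
        return [issue for issue, count in self._issue_counts.items() if count >= threshold]


memory = CorrectionMemory()
memory.record("Old Harbor Cafe", "permanently closed")  # invented example
memory.record("Hilltop Gallery", "permanently closed")  # invented example

print(memory.filter_candidates(["Old Harbor Cafe", "City Park"]))
print(memory.repeated_issues())
```

Filtering handles the individual correction. Counting issue types is what lets a repeated pattern, such as stale closure data, surface as something to fix at the source.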

What We Learned Along the Way

Our early assumptions were wrong.

We believed that pulling data from travel websites would be sufficient. It was not. Many real-world updates never appear on traditional platforms.

Local changes often show up first on maps, community reviews, or official notices. By expanding data sources and strengthening verification, accuracy improved noticeably.

Routes became more realistic. Timelines made sense. Long itineraries stopped collapsing under their own weight.

The system evolved through repeated failures and corrections.

Why Verification-First AI Matters

The future of AI travel planning is not about writing better descriptions.

It is about respecting real-world constraints. A useful itinerary must be explainable. It must adapt to change. It must reflect reality as it exists today.

Verification-first systems may feel slower. But they produce plans people can trust and follow.

Conclusion

Travel planning demands more than fluent language. It requires fact-checking, structured reasoning, and continuous learning. At iMean AI, our goal is simple. The system should not speak until it knows.

That is how travel planning becomes reliable.